Building a Machine Learning Application With Cloudera Data Science Workbench And Operational Database, Part 3: Productionization of ML models

Cloudera

JANUARY 20, 2021

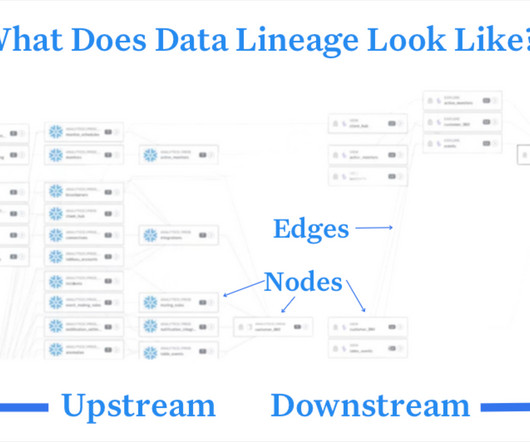

In this last installment, we’ll discuss a demo application that uses PySpark ML to build a classification model from training data stored in both Cloudera’s Operational Database (powered by Apache HBase) and Apache HDFS.

Training Data in HBase and HDFS

Below is a simple screen recording of the demo application.
