7 Essential Data Cleaning Best Practices

Monte Carlo

APRIL 1, 2024

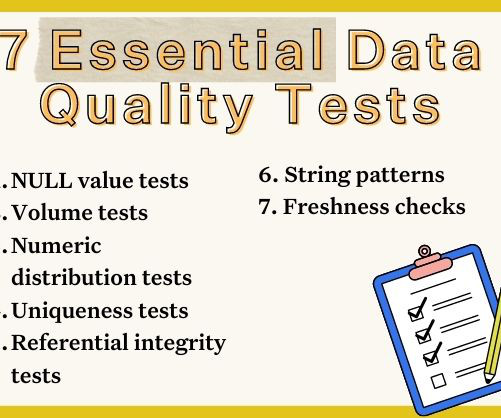

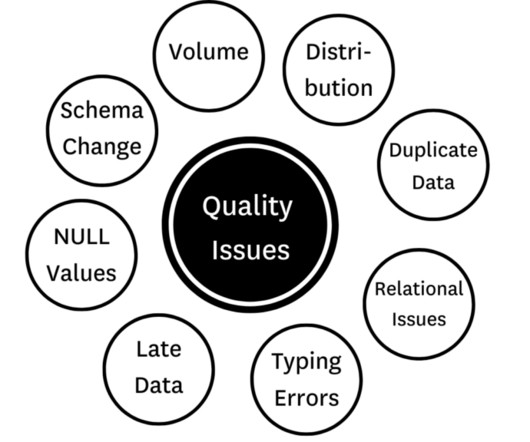

Data cleaning is an essential step in protecting your data from the adage "garbage in, garbage out." Effective data cleaning best practices fix or remove incorrect, inaccurate, corrupted, duplicate, or incomplete data in your dataset, so the garbage is removed before it ever enters your pipelines.
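To make those categories concrete, here is a minimal sketch in plain Python (standard library only) that drops incomplete, corrupted, incorrect, and duplicate records and normalizes what remains. The field names (`id`, `email`, `amount`), the `REQUIRED_FIELDS` tuple, the `clean_records` helper, and the validation rules are all hypothetical, chosen only for illustration. Real pipelines would define these rules from their own schema and often use a dataframe library instead.

```python
import math
from typing import Any

# Hypothetical schema for illustration: every record needs these fields.
REQUIRED_FIELDS = ("id", "email", "amount")


def clean_records(records: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Drop incomplete, invalid, and duplicate records; normalize the rest."""
    cleaned: list[dict[str, Any]] = []
    seen_ids: set[Any] = set()
    for record in records:
        # Incomplete: a required field is missing, None, or an empty string.
        if any(record.get(field) in (None, "") for field in REQUIRED_FIELDS):
            continue
        # Fix formatting: trim whitespace and lowercase the email address.
        email = str(record["email"]).strip().lower()
        # Corrupted: the amount must parse as a finite number.
        try:
            amount = float(record["amount"])
        except (TypeError, ValueError):
            continue
        if not math.isfinite(amount):
            continue
        # Incorrect (hypothetical rules): no negative amounts, email needs "@".
        if amount < 0 or "@" not in email:
            continue
        # Duplicate: keep only the first valid record seen for each id.
        if record["id"] in seen_ids:
            continue
        seen_ids.add(record["id"])
        cleaned.append({"id": record["id"], "email": email, "amount": amount})
    return cleaned


if __name__ == "__main__":
    raw = [
        {"id": 1, "email": " Ana@Example.com ", "amount": "19.99"},
        {"id": 1, "email": "ana@example.com", "amount": "19.99"},  # duplicate
        {"id": 2, "email": None, "amount": "5"},  # incomplete
        {"id": 3, "email": "bo@example.com", "amount": "oops"},  # corrupted
        {"id": 4, "email": "cy@example.com", "amount": -3},  # incorrect
    ]
    print(clean_records(raw))
    # → [{'id': 1, 'email': 'ana@example.com', 'amount': 19.99}]
```

The duplicate check runs after validation, so an invalid first copy of a record does not block a later valid copy with the same `id`. Whether to keep the first or last duplicate is a policy decision that depends on your data.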
