Snowflake’s New Python API Empowers Data Engineers to Build Modern Data Pipelines with Ease

Snowflake

APRIL 17, 2024

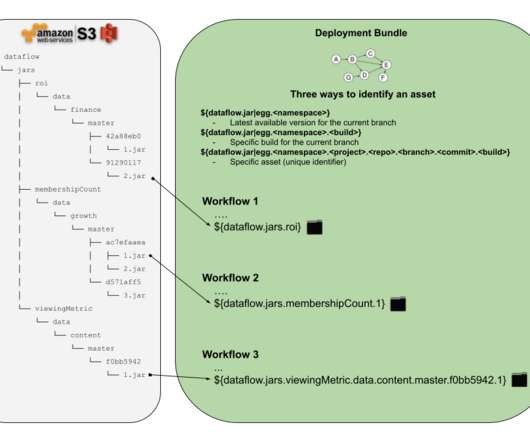

Because the previous Python connector API communicated mostly via SQL, it hindered the ability to manage Snowflake objects natively in Python, which limited data pipeline efficiency and made complex tasks harder to complete. To get started, explore the comprehensive API documentation, which will guide you through every step.
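To make the contrast concrete, here is a minimal sketch of the two styles for one task: creating a database. It is an illustration under assumptions, not code from the announcement. The helper names (`create_database_sql`, `create_database_via_connector`, `create_database_via_python_api`) and the database name `demo_db` are hypothetical, and the calls into the `snowflake.core` package reflect the Python API as documented at the time of writing, so check them against the current API documentation before relying on them.

```python
def create_database_sql(name: str) -> str:
    """SQL-string style: the statement is assembled as plain text.

    `name` is assumed to be a trusted identifier; this sketch does no escaping.
    """
    return f"CREATE DATABASE IF NOT EXISTS {name}"


def create_database_via_connector(conn, name: str) -> None:
    """Older style: send a SQL string through a connector connection."""
    cur = conn.cursor()
    try:
        cur.execute(create_database_sql(name))
    finally:
        cur.close()


def create_database_via_python_api(connection, name: str):
    """Newer style: describe the database as a Python object.

    `connection` is assumed to be an existing Snowflake connection or
    Snowpark session.
    """
    # Imported lazily so this file loads even if the package is not installed.
    from snowflake.core import CreateMode, Root
    from snowflake.core.database import Database

    root = Root(connection)
    return root.databases.create(
        Database(name=name), mode=CreateMode.if_not_exists
    )
```

In the first style, Python only carries a SQL string to the server. In the second, the database is a Python object, so the rest of a pipeline can work with it without composing or parsing SQL text.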
