Unpacking The Seven Principles Of Modern Data Pipelines

Data Engineering Podcast

AUGUST 13, 2023

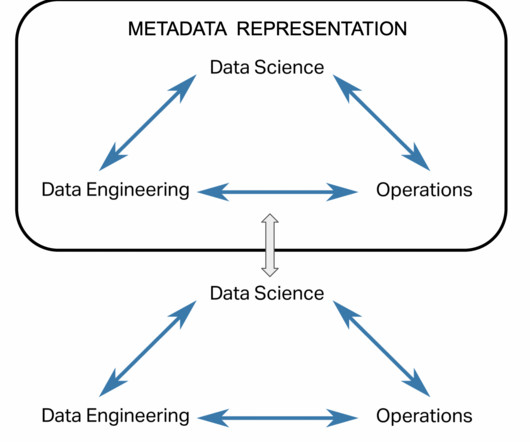

Summary: Data pipelines are the core of every data product, ML model, and business intelligence dashboard. The folks at Rivery distilled seven principles of modern data pipelines that will help you stay out of trouble and be productive with your data.
