Updates, Inserts, Deletes: Comparing Elasticsearch and Rockset for Real-Time Data Ingest

Rockset

OCTOBER 11, 2022

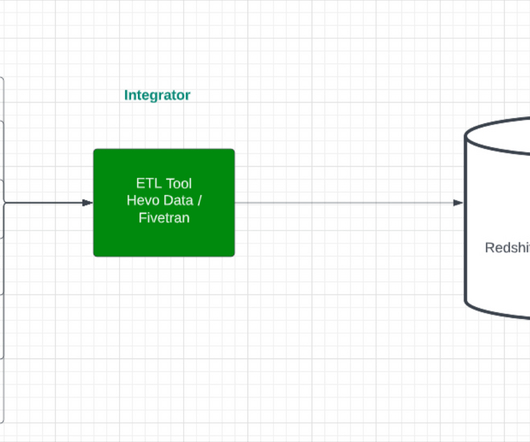

Introduction

Managing streaming data from a source system, like PostgreSQL, MongoDB or DynamoDB, into a downstream system for real-time analytics is a challenge for many teams. For a system like Elasticsearch, engineers need to have in-depth knowledge of the underlying architecture in order to efficiently ingest streaming data.
