The Five Use Cases in Data Observability: Ensuring Data Quality in New Data Sources

DataKitchen

MAY 10, 2024

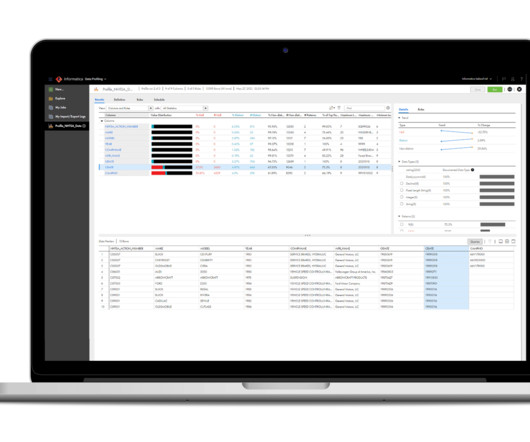

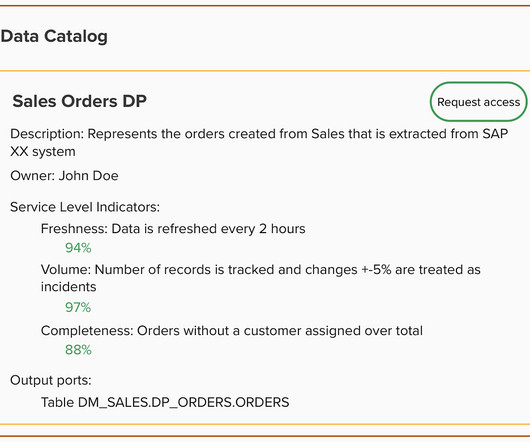

This not only enhances the accuracy and utility of the data but also significantly reduces the time and effort typically required for data cleansing. DataKitchen’s DataOps Observability stands out by providing intelligent profiling: automatic in-database profiling that adapts to the data’s unique characteristics.
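To make the idea of in-database profiling concrete, here is a minimal sketch in Python using the standard-library `sqlite3` module. It is not DataKitchen's implementation or API. The function name `profile_table`, the helper `build_demo_connection`, and the `orders` table are all hypothetical and exist only for illustration. The point it shows is that the statistics are computed by SQL aggregates inside the database, so only the summary values travel back to the client.

```python
import sqlite3


def profile_table(conn, table):
    """Profile every column of `table` using SQL aggregates run in the database.

    Hypothetical helper for illustration only; assumes `table` is a trusted name.
    """
    # PRAGMA table_info returns one row per column; index 1 is the column name.
    columns = [row[1] for row in conn.execute(f'PRAGMA table_info("{table}")')]
    profile = {}
    for col in columns:
        rows, nulls, distinct, lowest, highest = conn.execute(
            f'SELECT COUNT(*), COUNT(*) - COUNT("{col}"), '
            f'COUNT(DISTINCT "{col}"), MIN("{col}"), MAX("{col}") '
            f'FROM "{table}"'
        ).fetchone()
        profile[col] = {
            "rows": rows,
            "nulls": nulls,
            "distinct": distinct,
            "min": lowest,
            "max": highest,
        }
    return profile


def build_demo_connection():
    """Create an in-memory database with a small, made-up `orders` table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER, amount REAL, country TEXT)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [(1, 19.99, "US"), (2, None, "DE"), (3, 5.0, "US"), (4, 42.5, None)],
    )
    return conn


if __name__ == "__main__":
    connection = build_demo_connection()
    for column, stats in profile_table(connection, "orders").items():
        print(column, stats)
```

A profile like this gives a quick first read on a new data source: unexpected null counts, low distinct counts, or out-of-range minimum and maximum values are the kind of signals that would otherwise surface only during manual cleansing.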
