Intrinsic Data Quality: 6 Essential Tactics Every Data Engineer Needs to Know

Monte Carlo

JANUARY 10, 2024

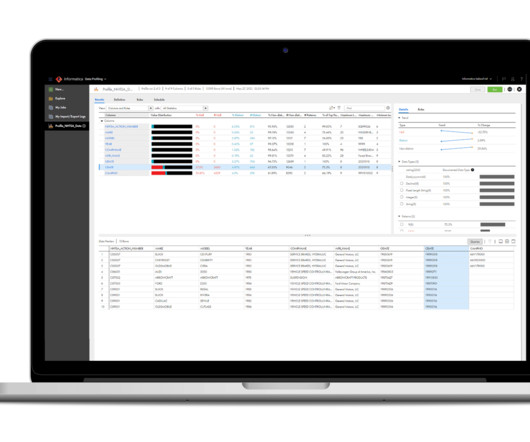

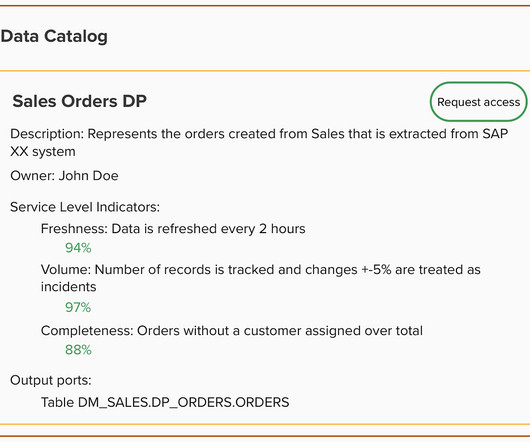

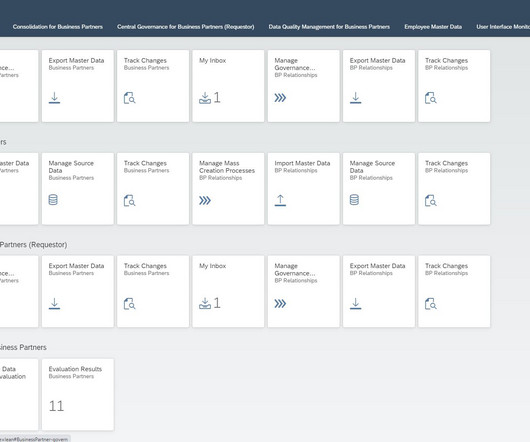

In this article, we present six intrinsic data quality techniques that serve as both compass and map in the quest to refine the inner beauty of your data. They include (1) data profiling, (2) data cleansing, (3) data validation, (4) data auditing, and (5) data governance.
