Top 10 Data Science Websites to Learn More

Knowledge Hut

FEBRUARY 29, 2024

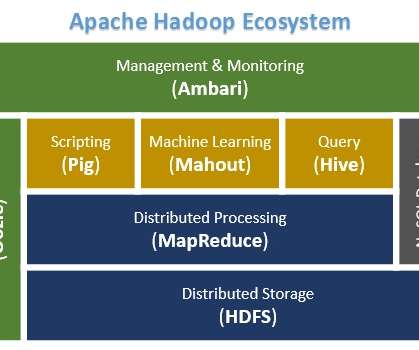

A growing number of data science resources are being published online, including data science websites, data analytics websites, and data science and data scientist portfolio websites. With so much material available, knowing the right tools and technologies is essential for handling the data these resources deal with.
