Event-Driven Microservices with Python and Apache Kafka

Confluent

SEPTEMBER 21, 2022

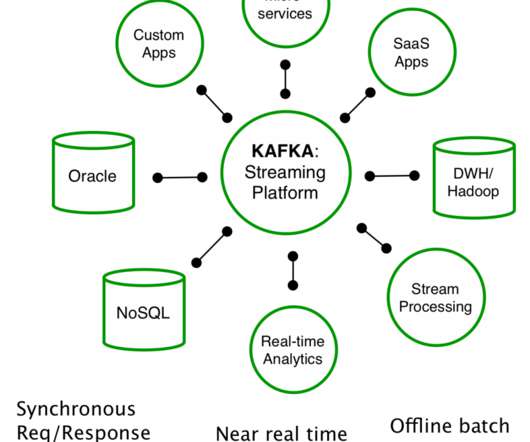

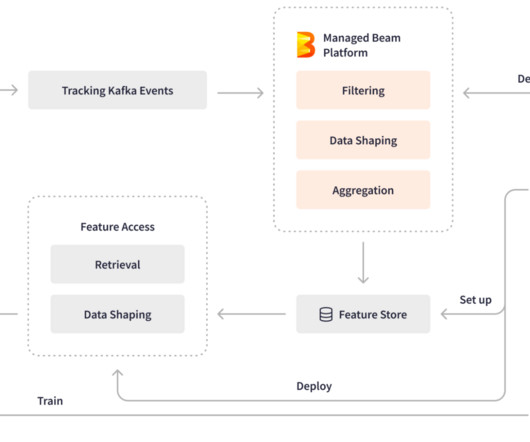

A deep dive into how microservices work, why they are the backbone of real-time applications, and how to build event-driven microservice applications with Python and Kafka.
