An Engineering Guide to Data Quality - A Data Contract Perspective - Part 2

Data Engineering Weekly

MAY 16, 2023

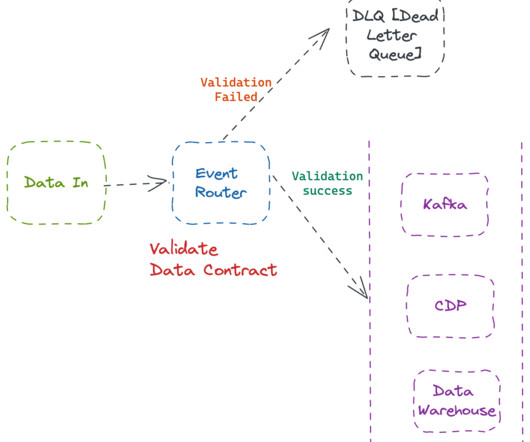

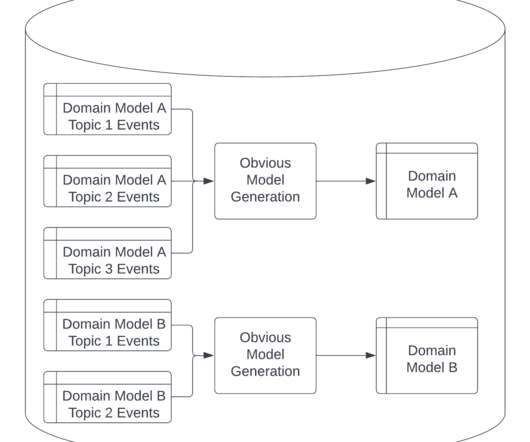

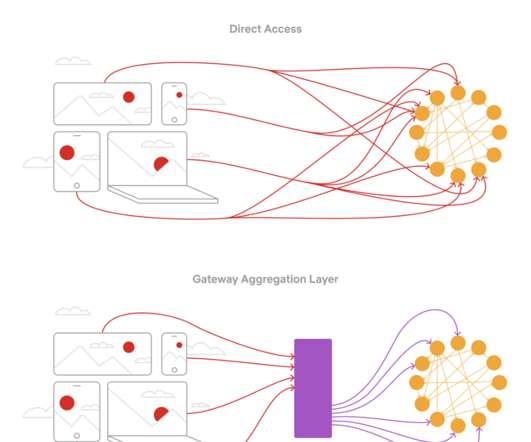

In the first part of this series, we discussed design patterns for data creation and the pros and cons of each system from a data contract perspective. In this second part, we focus on architectural patterns for implementing data quality from the same perspective, guided by two questions: why is data quality expensive, and how do we fix it?
