Data Testing Tools: Key Capabilities and 6 Tools You Should Know

Databand.ai

AUGUST 30, 2023

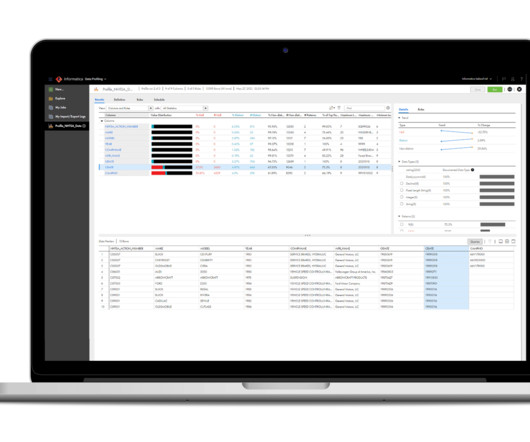

Helen Soloveichik

What Are Data Testing Tools?

Data testing tools are software applications designed to assist data engineers and other professionals in validating, analyzing, and maintaining data quality.
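To make "validating data quality" more concrete, here is a minimal, tool-agnostic sketch in Python of the kinds of checks such tools typically automate: not-null, uniqueness, and range tests run over a list of records. The function names (`check_not_null`, `check_unique`, `check_in_range`, `run_tests`) and the sample `orders` data are hypothetical illustrations. They are not the API of Databand or of any particular data testing tool.

```python
from typing import Any, Callable, Dict, List

# Hypothetical record type: each row is a dict of column name -> value.
Row = Dict[str, Any]


def check_not_null(rows: List[Row], column: str) -> List[int]:
    """Return indices of rows where `column` is missing or None."""
    return [i for i, row in enumerate(rows) if row.get(column) is None]


def check_unique(rows: List[Row], column: str) -> List[int]:
    """Return indices of rows whose non-null `column` value already appeared."""
    seen: set = set()
    duplicates: List[int] = []
    for i, row in enumerate(rows):
        value = row.get(column)
        if value is None:
            continue  # nulls are left to check_not_null
        if value in seen:
            duplicates.append(i)
        else:
            seen.add(value)
    return duplicates


def check_in_range(rows: List[Row], column: str, low: float, high: float) -> List[int]:
    """Return indices of rows whose non-null `column` value lies outside [low, high]."""
    return [
        i
        for i, row in enumerate(rows)
        if row.get(column) is not None and not (low <= row[column] <= high)
    ]


def run_tests(
    rows: List[Row], tests: Dict[str, Callable[[List[Row]], List[int]]]
) -> Dict[str, List[int]]:
    """Run each named test and map its name to the failing row indices."""
    return {name: test(rows) for name, test in tests.items()}


# Hypothetical sample data with deliberate quality problems.
orders = [
    {"order_id": 1, "amount": 25.0},
    {"order_id": 2, "amount": None},   # missing amount
    {"order_id": 2, "amount": 40.0},   # duplicate order_id
    {"order_id": 3, "amount": -5.0},   # negative amount
]

results = run_tests(orders, {
    "order_id is not null": lambda r: check_not_null(r, "order_id"),
    "order_id is unique": lambda r: check_unique(r, "order_id"),
    "amount is not null": lambda r: check_not_null(r, "amount"),
    "amount in [0, 10000]": lambda r: check_in_range(r, "amount", 0, 10_000),
})

for name, failures in results.items():
    status = "PASS" if not failures else f"FAIL (rows {failures})"
    print(f"{name}: {status}")
```

Running this reports a pass for `order_id is not null` and failures for the other three tests, each pointing at the offending row. Dedicated tools add scheduling, alerting, and integration with pipelines on top of checks like these.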
