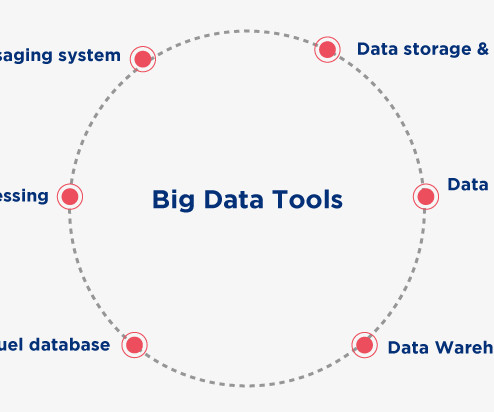

Top Big Data Tools You Need to Know in 2023

Knowledge Hut

DECEMBER 27, 2023

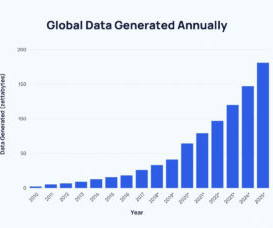

Volume: Refers to the massive amounts of data that organizations collect from various sources such as transactions, smart devices (IoT), videos, images, audio, social media, and industrial equipment, to name a few.

Types of Big Data

1. Structured: any data that can be stored, accessed, and processed in a fixed format (Source: Guru99.com)
