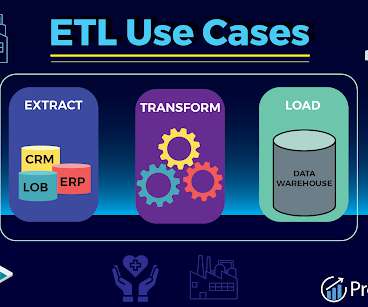

Top ETL Use Cases for BI and Analytics: Real-World Examples

ProjectPro

JANUARY 27, 2023

However, the sheer volume of data can overwhelm you once you start looking at historical trends. ETL automates the time-consuming work of collecting and transforming that data, so you can analyze and optimize your investment strategy using high-quality structured data.
