What is AWS Data Pipeline?

ProjectPro

JUNE 16, 2022

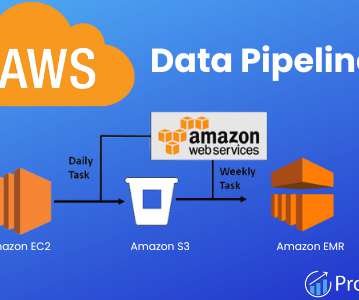

An AWS data pipeline helps businesses move and unify their data to support data-driven initiatives. It lets data flow from one system to another, for example from a data lake to an analytics database, or from an application to a data warehouse. The AWS CLI is a useful tool for managing Amazon Web Services resources from the command line.
