What is Data Ingestion? Types, Frameworks, Tools, Use Cases

Knowledge Hut

APRIL 25, 2023

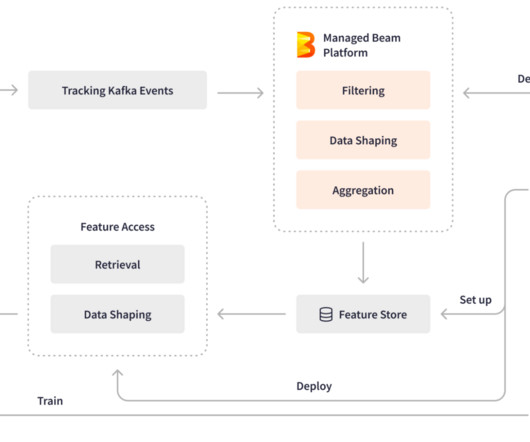

An end-to-end data science pipeline runs from the initial business discussion to delivering the product to customers. One of the key components of this pipeline is data ingestion, which integrates data from multiple sources such as IoT devices, SaaS applications, and on-premises systems.
