15+ Must Have Data Engineer Skills in 2023

Knowledge Hut

NOVEMBER 28, 2023

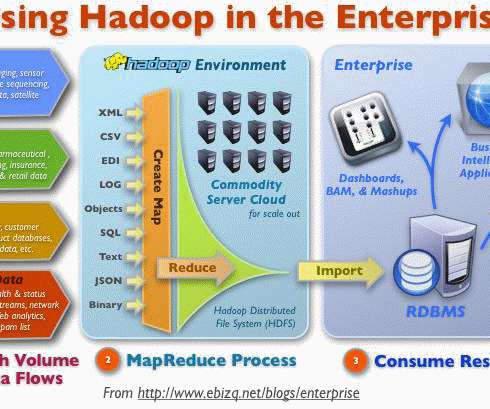

With a plethora of new technology tools on the market, data engineers should update their skill set with continuous learning and data engineer certification programs. What do Data Engineers Do? Big resources still manage file data hierarchically using Hadoop's open-source ecosystem.

Let's personalize your content