A Dive into the Basics of Big Data Storage with HDFS

Analytics Vidhya

FEBRUARY 6, 2023

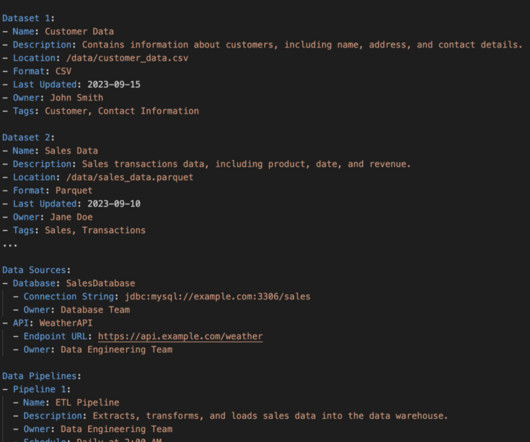

Introduction

HDFS (Hadoop Distributed File System) is not a traditional database but a distributed file system designed to store and process big data. It provides high-throughput access to data and is optimized for […]

The post A Dive into the Basics of Big Data Storage with HDFS appeared first on Analytics Vidhya.