Data Warehouse vs Big Data

Knowledge Hut

APRIL 23, 2024

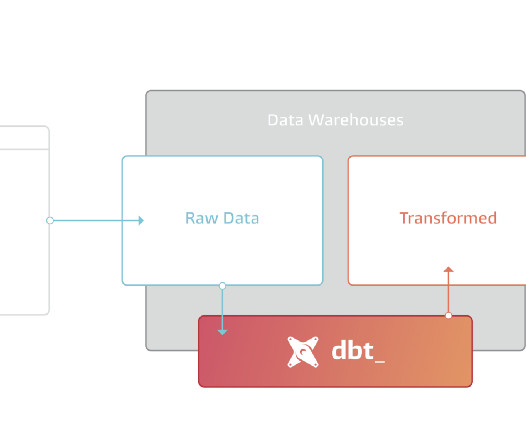

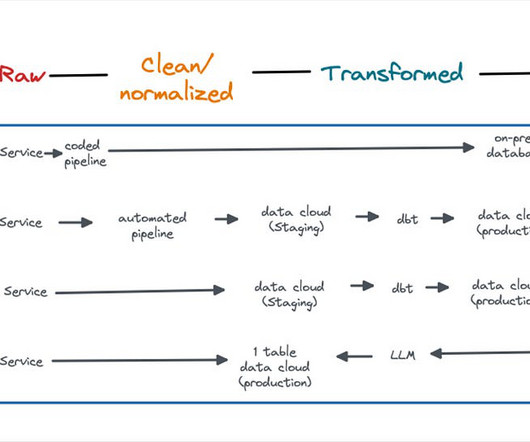

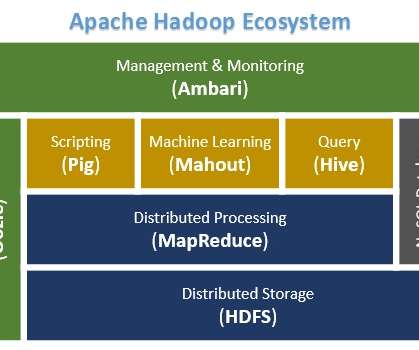

Two popular approaches to managing and analyzing large volumes of data are the data warehouse and big data. Both deal with large datasets, but when it comes to data warehouse vs big data, they have different focuses and offer distinct advantages.
