6 Pillars of Data Quality and How to Improve Your Data

Databand.ai

MAY 30, 2023

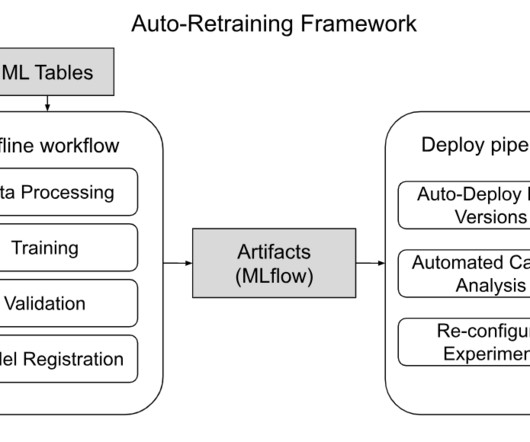

High-quality data is essential for making well-informed decisions, performing accurate analyses, and developing effective strategies. Data quality depends on several factors, including how data is collected, entered, stored, and integrated.
