RAG vs Fine Tuning: How to Choose the Right Method

Monte Carlo

MAY 30, 2024

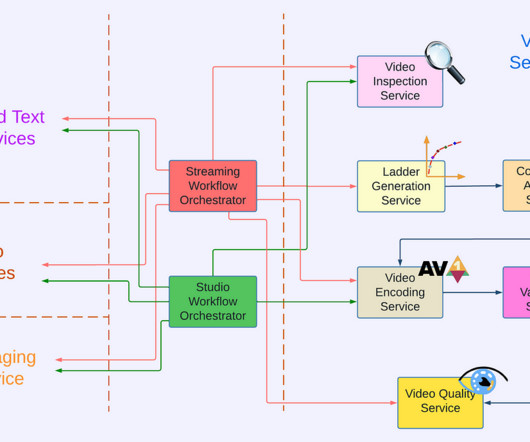

Retrieval-augmented generation (RAG) is an architecture framework introduced by Meta in 2020 that connects your large language model (LLM) to a curated, dynamic database. In the data retrieval step, the RAG system searches that database for data relevant to the user's query. A RAG flow in Databricks can be visualized like this.
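To make the data retrieval step concrete, here is a minimal sketch in Python. It is not how Databricks or any production RAG system does it: it assumes a tiny in-memory list of documents and swaps in a simple bag-of-words cosine similarity where a real system would use learned embeddings and a vector database. The names `retrieve`, `build_prompt`, and `DOCS` are hypothetical and exist only for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, top_k=2):
    """Return up to top_k documents that share vocabulary with the query.

    Assumption: word overlap stands in for the embedding similarity
    a real RAG system would compute.
    """
    query_vec = Counter(tokenize(query))
    scored = [(cosine(query_vec, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(query, documents, top_k=2):
    """Prepend the retrieved context to the question before calling an LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, top_k))
    return f"Context:\n{context}\n\nQuestion: {query}"


# Hypothetical knowledge base, invented for this example.
DOCS = [
    "Refunds are issued within 14 days of purchase.",
    "Our support desk is open Monday through Friday.",
    "Shipping to Canada takes five business days.",
]

if __name__ == "__main__":
    print(build_prompt("How long do refunds take?", DOCS, top_k=1))
```

The string returned by `build_prompt` is what would be sent to the LLM, so the model answers from the retrieved context rather than from its training data alone. Updating `DOCS` changes the answers without retraining anything, which is the "dynamic database" property described above.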
