How to Build a Data Pipeline in 6 Steps

Ascend.io

JANUARY 2, 2024

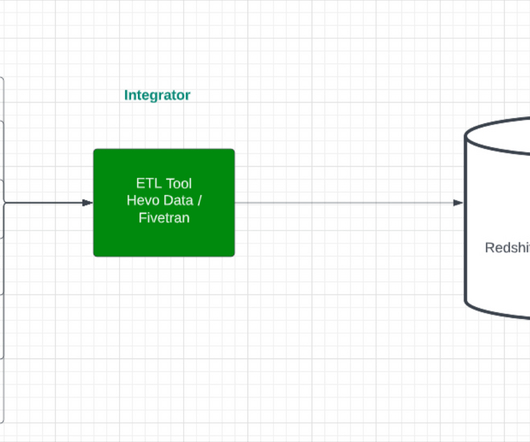

Let’s be honest: creating effective, robust, and reliable data pipelines, the ones that feed your company’s reporting and analytics, is no walk in the park. From building the connectors to ensuring that data lands smoothly in your reporting warehouse, each step requires a nuanced understanding and a strategic approach.
