A Guide to Seamless Data Fabric Implementation

Striim

FEBRUARY 5, 2024

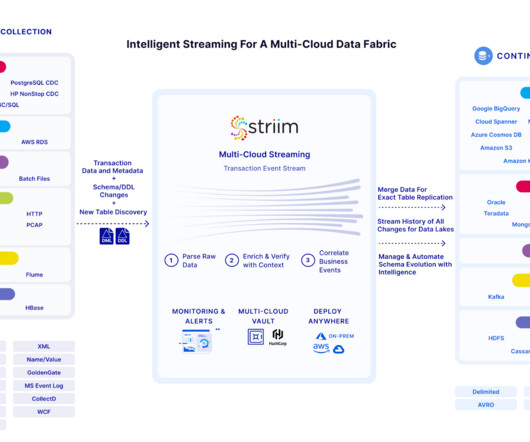

Data Fabric is a comprehensive data management approach that goes beyond traditional methods, offering a framework for seamless integration across diverse sources. Because a data fabric upholds data quality across those sources, organizations can trust the information they rely on for decision-making, fostering a data-driven culture built on dependable insights.
