8 Data Quality Monitoring Techniques & Metrics to Watch

Databand.ai

AUGUST 30, 2023

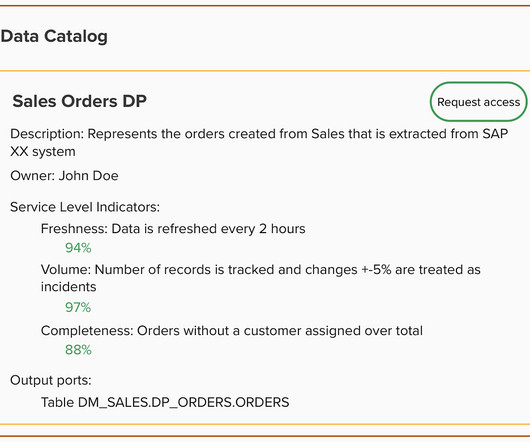

Validity: Adherence to predefined formats, rules, or standards for each attribute within a dataset. Uniqueness: Ensuring that no duplicate records exist within a dataset. Integrity: Maintaining referential relationships between datasets without any broken links.

Let's personalize your content