Exploring The Evolution And Adoption of Customer Data Platforms and Reverse ETL

Data Engineering Podcast

NOVEMBER 4, 2021

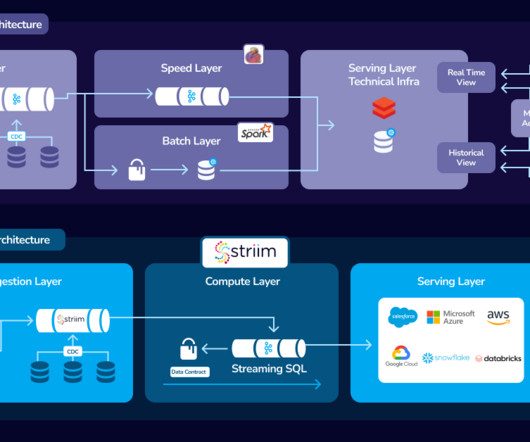

Summary The precursor to widespread adoption of cloud data warehouses was the creation of customer data platforms. Acting as a centralized repository of information about how your customers interact with your organization they drove a wave of analytics about how to improve products based on actual usage data.

Let's personalize your content