Creating a Data Pipeline with Spark, Google Cloud Storage and Big Query

Towards Data Science

MARCH 6, 2023

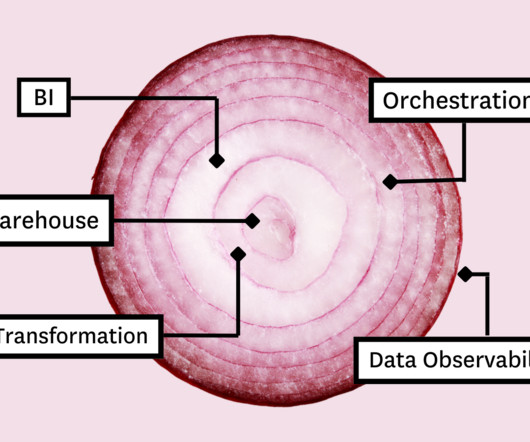

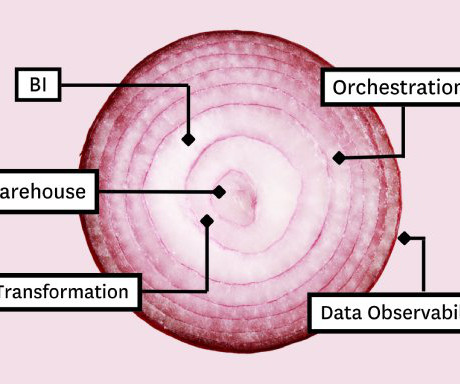

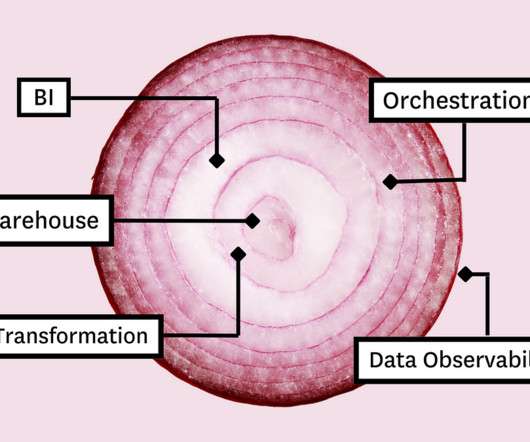

On-premise and cloud working together to deliver a data product. Photo by Toro Tseleng on Unsplash

Developing a data pipeline is somewhat similar to playing with LEGO: you picture what needs to be achieved (the data requirements), choose the pieces (software, tools, platforms), and fit them together.
