What is a data processing analyst?

Edureka

AUGUST 2, 2023

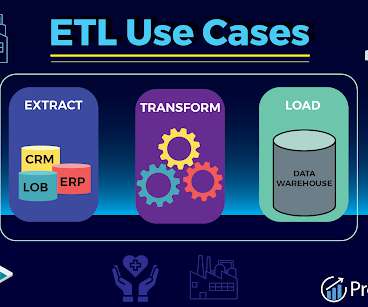

What does a data processing analyst do? A data processing analyst's job description includes a variety of duties that are essential to efficient data management. They must be well-versed in both the data sources they work with and the procedures used to extract data from them.
