Understanding the 4 Fundamental Components of the Big Data Ecosystem

U-Next

SEPTEMBER 23, 2022

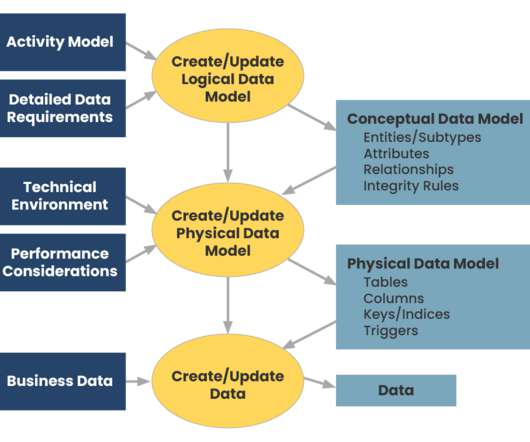

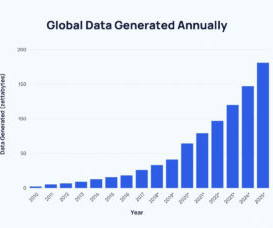

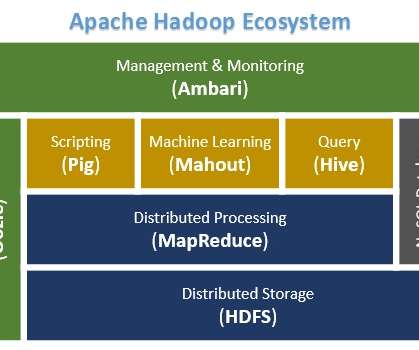

Organizations once dealt with static, centrally stored data collected from numerous sources. With the advent of the web and cloud services, cloud computing is fast supplanting traditional in-house systems as a dependable, scalable, and cost-effective IT solution.
