What is a Data Pipeline?

Grouparoo

OCTOBER 26, 2021

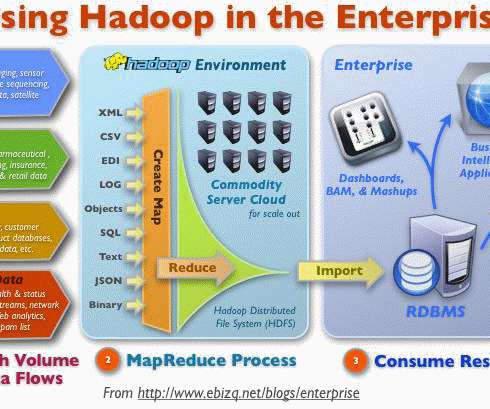

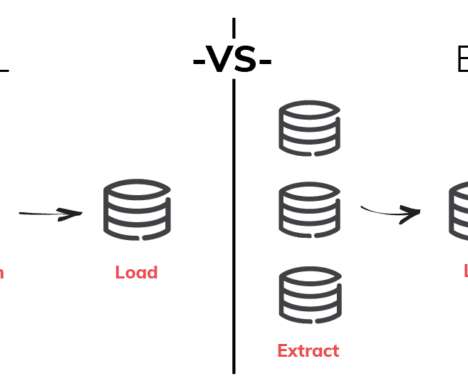

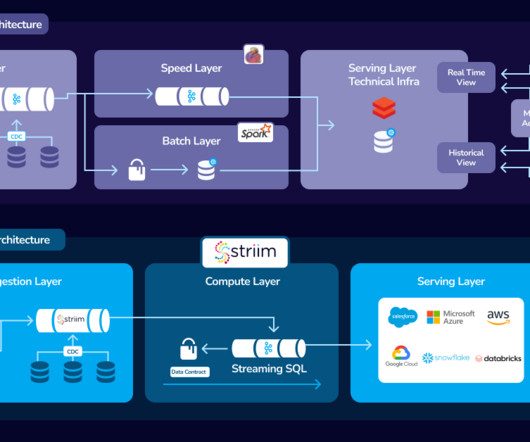

An ETL data pipeline extracts raw data from a source system, transforms it into a structure that can be processed by a target system, and loads the transformed data into the target, usually a database or data warehouse. While the terms “data pipeline” and “ETL” are often used interchangeably, there are some key differences between the two.
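To make the three ETL stages concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not how any particular tool implements ETL: the inline CSV stands in for a source system, an in-memory SQLite database stands in for the target, and the names `RAW_CSV`, `extract`, `transform`, `load`, `run_pipeline`, and the `users` table are all hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system, kept inline so the sketch is self-contained.
RAW_CSV = """id,email,signup_date
1,  Alice@Example.com ,2021-10-01
2,bob@example.com,2021-10-15
"""


def extract(raw_text):
    """Extract: read raw rows from the source as dictionaries keyed by column name."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Transform: reshape raw rows into the structure the target table expects."""
    return [
        (int(row["id"]), row["email"].strip().lower(), row["signup_date"])
        for row in rows
    ]


def load(records, conn):
    """Load: write the transformed records into the target (here, a SQLite table)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users "
        "(id INTEGER PRIMARY KEY, email TEXT, signup_date TEXT)"
    )
    conn.executemany("INSERT INTO users VALUES (?, ?, ?)", records)
    conn.commit()


def run_pipeline(raw_text, conn):
    """Run the three stages in order: extract, then transform, then load."""
    load(transform(extract(raw_text)), conn)


# An in-memory database stands in for a real database or data warehouse.
conn = sqlite3.connect(":memory:")
run_pipeline(RAW_CSV, conn)
rows = conn.execute("SELECT id, email, signup_date FROM users ORDER BY id").fetchall()
print(rows)
```

Keeping each stage in its own function means the source or the target can be swapped without touching the transformation logic.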
