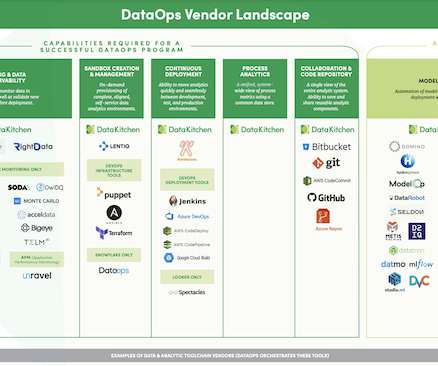

DataOps Framework: 4 Key Components and How to Implement Them

Databand.ai

AUGUST 30, 2023

The DataOps framework is a set of practices, processes, and technologies that enables organizations to improve the speed, accuracy, and reliability of their data management and analytics operations. The core philosophy of DataOps is to treat data as a valuable asset that must be managed and processed efficiently.