Modern Data Engineering

Towards Data Science

NOVEMBER 4, 2023

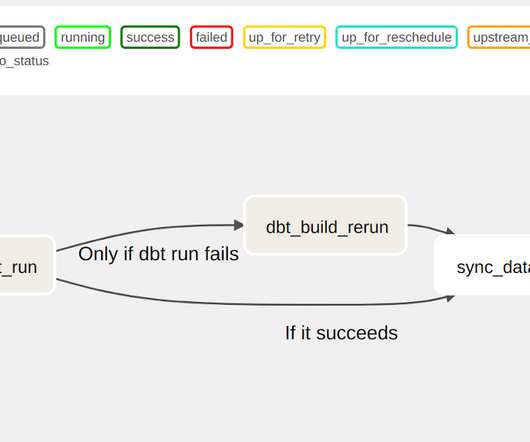

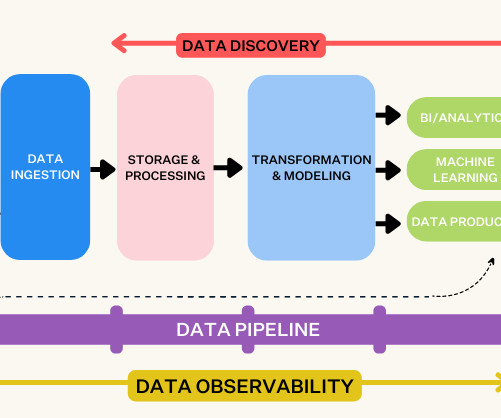

Does your data engineering (DE) work well enough to fuel advanced data pipelines and business intelligence (BI)? Are your data pipelines efficient? We will discuss how to apply modern data engineering knowledge to power advanced analytics pipelines and achieve operational excellence.

Datalake example. Image by author.

What is it?
