Data Engineering Weekly Radio #120

Data Engineering Weekly

MARCH 11, 2023

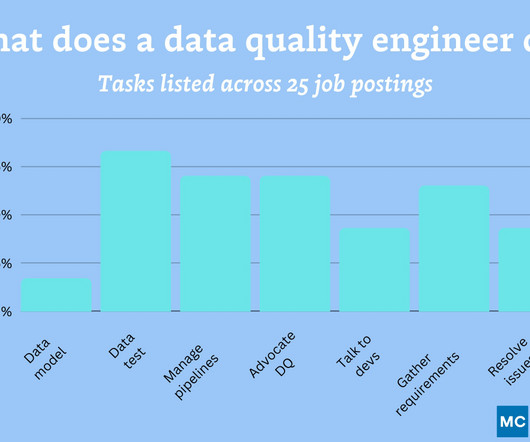

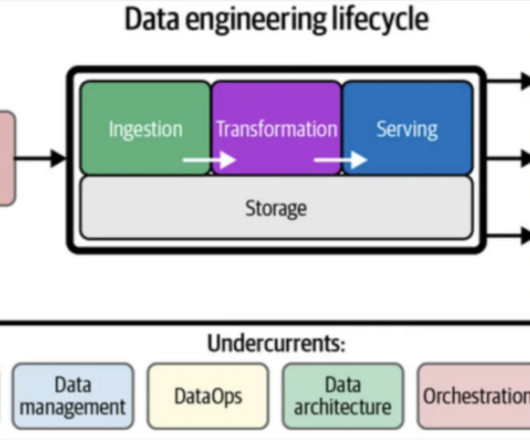

We're back with Data Engineering Weekly Radio for edition #120. Each week, we take two or three articles from that week's Data Engineering Weekly edition and analyze them in depth. In this episode, we discuss an article by Colin Campbell that highlights the need for a data catalog and the market scope for data contract solutions.