Big Data Technologies that Everyone Should Know in 2024

Knowledge Hut

APRIL 25, 2024

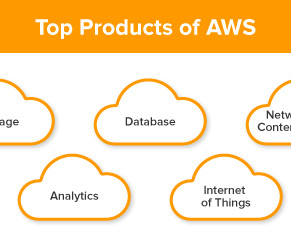

Big data refers to the massive volume of data that organizations generate every day. In the past, data at this scale was too large and complex for traditional data processing tools to handle. Today, a variety of big data technologies are available to store and process it, including Apache Hadoop, Apache Spark, and MongoDB.
