What is a Data Pipeline?

Grouparoo

OCTOBER 26, 2021

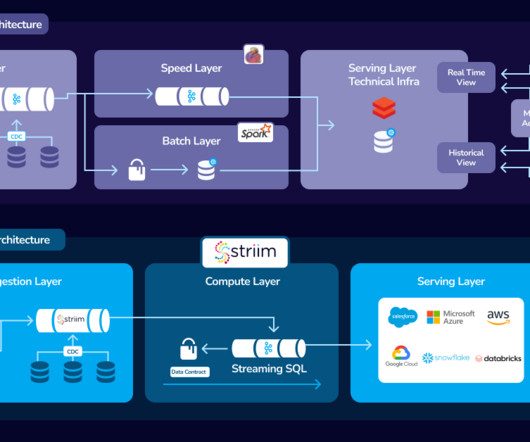

As a result, data has to be moved between source and destination systems, and this is usually done with data pipelines. What is a data pipeline? A data pipeline is a set of processes that move and transform data from one or more sources to one or more destinations.
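To make the definition concrete, here is a minimal sketch of a pipeline as three chained steps: read from a source, transform each record, and write to a destination. The function names (`extract`, `transform`, `load`, `run_pipeline`) and the record fields (`email`, `name`) are assumptions chosen for illustration; they do not come from any particular tool, and in-memory lists stand in for real source and destination systems.

```python
from typing import Dict, Iterable, Iterator, List

Record = Dict[str, str]


def extract(source: Iterable[Record]) -> Iterator[Record]:
    """Read records from the source system (here, any iterable of dicts)."""
    for record in source:
        # Copy each record so later steps never mutate the source data.
        yield dict(record)


def transform(records: Iterable[Record]) -> Iterator[Record]:
    """Normalize each record: trim whitespace, lowercase emails, title-case names."""
    for record in records:
        yield {
            "email": record["email"].strip().lower(),
            "name": record["name"].strip().title(),
        }


def load(records: Iterable[Record], destination: List[Record]) -> int:
    """Write records to the destination system (here, a list) and return the count."""
    count = 0
    for record in records:
        destination.append(record)
        count += 1
    return count


def run_pipeline(source: Iterable[Record], destination: List[Record]) -> int:
    """Chain the three steps so data flows from source to destination."""
    return load(transform(extract(source)), destination)


if __name__ == "__main__":
    # Hypothetical sample data standing in for a real source system.
    crm_export = [{"email": "  Ada@Example.COM ", "name": "ada lovelace"}]
    warehouse: List[Record] = []
    moved = run_pipeline(crm_export, warehouse)
    print(moved, warehouse)
```

Because each step is a generator, records stream through one at a time rather than being held in memory all at once. A production pipeline would typically replace the lists with database or API connections and add error handling and scheduling.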
