An Engineering Guide to Data Quality - A Data Contract Perspective - Part 2

Data Engineering Weekly

MAY 16, 2023

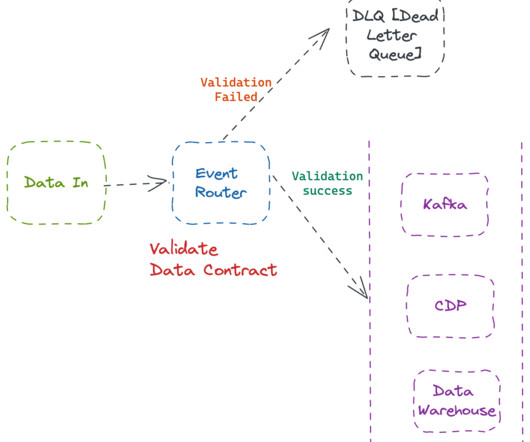

I won’t bore you with the importance of data quality in this blog. Instead, let’s examine the current data pipeline architecture and ask why data quality is expensive. Rather than looking at the implementation of data quality frameworks, let’s examine the architectural patterns of the data pipeline.
