What is a Data Pipeline?

Grouparoo

OCTOBER 26, 2021

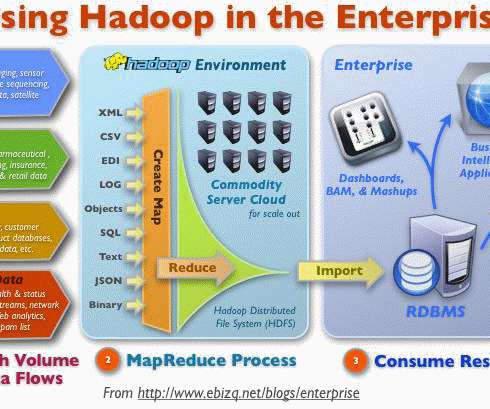

A data pipeline typically consists of three main elements: an origin, a set of processing steps, and a destination. Data pipelines enable the efficient transfer of data between systems, for data integration and other purposes. ETL (extract, transform, load) systems follow this same pattern, which makes them a subset of the broader term “data pipeline”.
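To make the three elements concrete, here is a minimal sketch in Python. It is only an illustration, not how any particular tool implements pipelines: the `origin` function, the two processing steps, the sample records, and the in-memory list standing in for a destination are all hypothetical names and data invented for this example.

```python
from typing import Callable, Dict, Iterable, Iterator, List

Record = Dict[str, str]
Step = Callable[[Iterable[Record]], Iterator[Record]]


def origin() -> Iterator[Record]:
    """Hypothetical origin: stands in for a database, file, or API."""
    yield {"email": " Ada@Example.com ", "plan": "pro"}
    yield {"email": "", "plan": "free"}
    yield {"email": "grace@example.com", "plan": "free"}


def drop_missing_email(records: Iterable[Record]) -> Iterator[Record]:
    """Processing step: skip records whose email is empty or whitespace."""
    for record in records:
        if record["email"].strip():
            yield record


def normalize_email(records: Iterable[Record]) -> Iterator[Record]:
    """Processing step: trim whitespace and lowercase the email."""
    for record in records:
        yield {**record, "email": record["email"].strip().lower()}


def run_pipeline(
    source: Iterable[Record], steps: List[Step], destination: List[Record]
) -> List[Record]:
    """Chain each step onto the source, then write the results to the destination."""
    stream: Iterable[Record] = source
    for step in steps:
        stream = step(stream)
    for record in stream:
        destination.append(record)
    return destination


# The destination here is a plain list; a real one might be a warehouse or CRM.
warehouse = run_pipeline(origin(), [drop_missing_email, normalize_email], [])
```

Running this leaves two cleaned records in `warehouse`. Each step is a generator, so records flow through one at a time instead of being loaded all at once, and swapping the origin or destination does not require touching the processing steps.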
