Data Integration: Approaches, Techniques, Tools, and Best Practices for Implementation

AltexSoft

SEPTEMBER 10, 2021

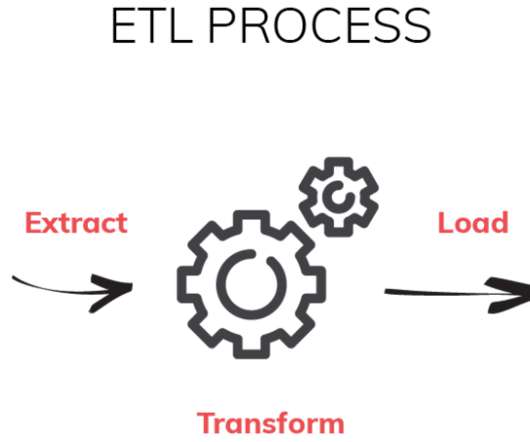

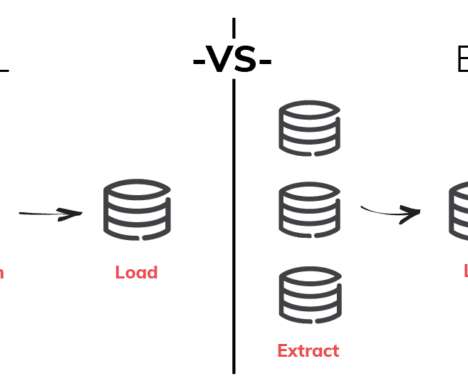

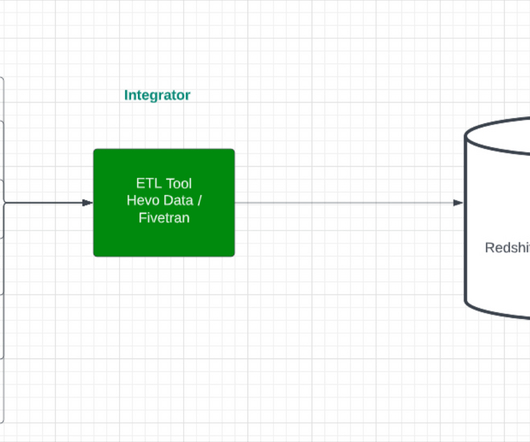

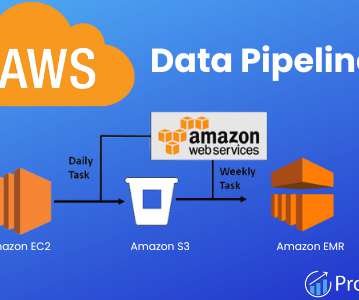

What is data integration and why is it important?

Data integration is the process of taking data from multiple disparate internal and external sources and combining it in a single location (e.g., a data warehouse) to achieve a unified view of the collected data.

Key types of data integration