Setting up Data Lake on GCP using Cloud Storage and BigQuery

Analytics Vidhya

FEBRUARY 25, 2023

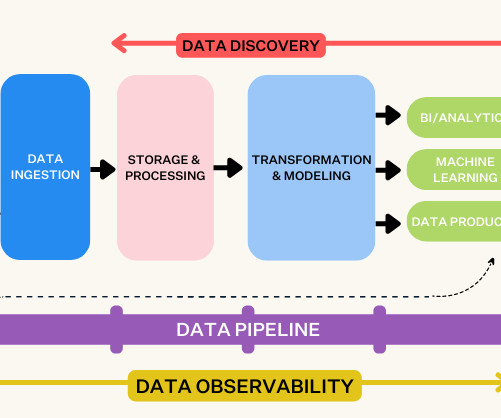

Introduction A data lake is a centralized and scalable repository storing structured and unstructured data. The need for a data lake arises from the growing volume, variety, and velocity of data companies need to manage and analyze.

Let's personalize your content