Making dbt Cloud API calls using dbt-cloud-cli

dbt Developer Hub

MAY 2, 2022

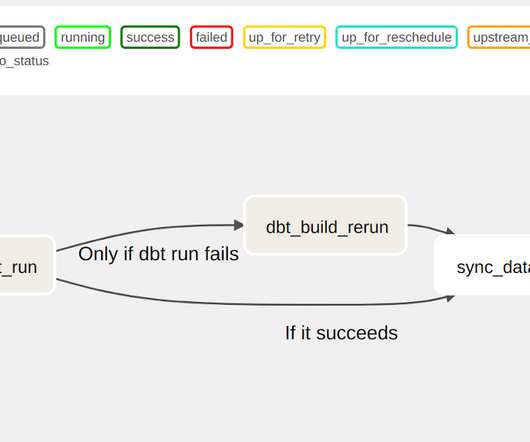

dbt Cloud is a hosted service that many organizations use for their dbt deployments. When dbt Cloud jobs are triggered (e.g., by a cron schedule or an API call), they generate various artifacts that contain valuable metadata about the dbt project and its run results.

What is dbt-cloud-cli and why should you use it?
