Top 10 Hadoop Tools to Learn in Big Data Career 2024

Knowledge Hut

DECEMBER 21, 2023

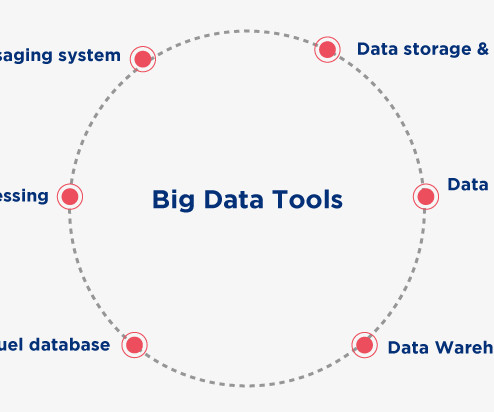

Today, almost every industry generates enormous amounts of data, and that data is crucial to the decisions an organization has to make. This data is referred to as “big data”: large volumes of both structured and unstructured data that have to be processed.
