Hadoop Salary: A Complete Guide from Beginner to Advanced

Knowledge Hut

JULY 27, 2023

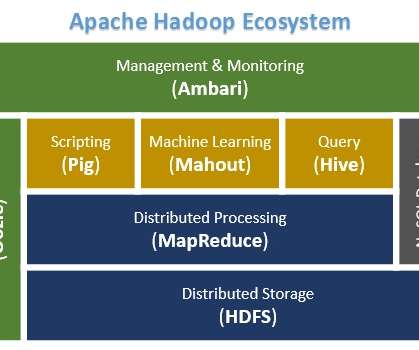

What do Hadoop developers do? Using the Hadoop framework, they create scalable, fault-tolerant Big Data applications. They are skilled in working with tools like MapReduce, Hive, and HBase to manage and process huge datasets, and they are proficient in programming languages like Java and Python.
