Deployment of Exabyte-Backed Big Data Components

LinkedIn Engineering

DECEMBER 19, 2023

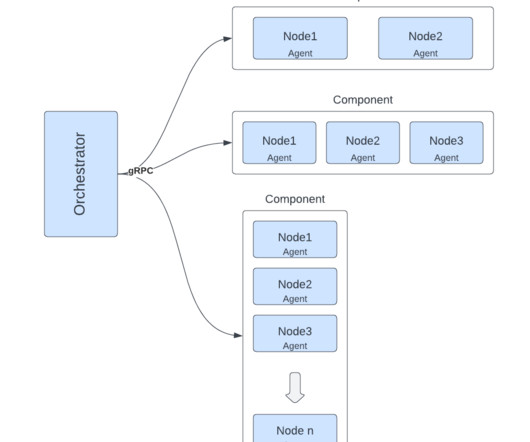

Our rolling upgrade (RU) framework ensures that our big data infrastructure, which consists of over 55,000 hosts and 20 clusters holding exabytes of data, is deployed and updated smoothly, minimizing downtime and avoiding performance degradation. The data is accessible through Hive and Trino, allowing queries for different dates and timestamps.
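To make the idea of updating a large fleet without downtime concrete, here is a minimal sketch of a batch-wise rollout that halts as soon as a batch fails its health check. It is an illustration under stated assumptions, not the framework's actual implementation: the function names (`rolling_upgrade`, `batches`), the `upgrade` and `is_healthy` callbacks, and the fixed batch size are all hypothetical.

```python
from typing import Callable, Iterable, List, Tuple


def batches(hosts: List[str], batch_size: int) -> Iterable[List[str]]:
    """Yield consecutive slices of at most batch_size hosts."""
    for start in range(0, len(hosts), batch_size):
        yield hosts[start:start + batch_size]


def rolling_upgrade(
    hosts: List[str],
    batch_size: int,
    upgrade: Callable[[str], None],     # hypothetical: upgrades one host
    is_healthy: Callable[[str], bool],  # hypothetical: post-upgrade check
) -> Tuple[List[str], List[str]]:
    """Upgrade hosts one batch at a time, stopping at the first bad batch.

    Returns (hosts in fully healthy batches, unhealthy hosts in the
    batch that halted the rollout). The second list is empty when the
    whole rollout succeeds.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")

    done: List[str] = []
    for batch in batches(hosts, batch_size):
        for host in batch:
            upgrade(host)
        failed = [h for h in batch if not is_healthy(h)]
        if failed:
            # Stop here so the remaining hosts keep serving on the old version.
            return done, failed
        done.extend(batch)
    return done, []
```

Keeping the batch small relative to the cluster limits how much capacity is out of service at any moment, and stopping on the first unhealthy batch bounds the impact of a bad release to that batch.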
