Data Pipeline: Definition, Architecture, Examples, and Use Cases

ProjectPro

DECEMBER 7, 2021

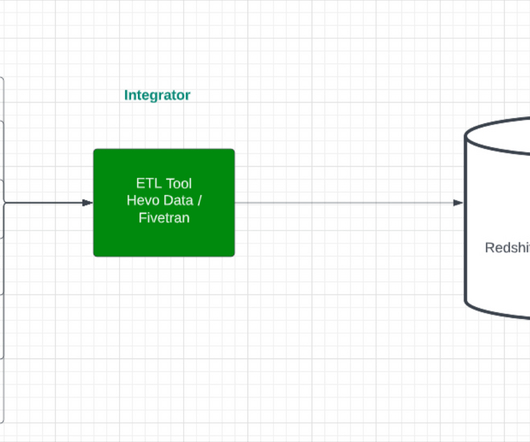

A data pipeline may include filtering, normalization, and consolidation steps that shape raw data into the form its consumers need. It can consist of simple or advanced processes such as ETL (Extract, Transform, and Load), or it can prepare training datasets for machine learning applications. Data ingestion methods gather data and bring it into the data processing system.
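To make the filtering, normalizing, and consolidating steps concrete, here is a minimal sketch in plain Python. The record layout (`user`, `amount`), the sample data, and all function names are assumptions made for illustration; a real pipeline would read from an actual source and would likely rely on a dedicated framework.

```python
from collections import defaultdict

# Hypothetical raw records, standing in for data delivered by an ingestion step.
RAW_RECORDS = [
    {"user": " Alice ", "amount": "10.5"},
    {"user": "bob", "amount": "4"},
    {"user": "ALICE", "amount": "2.5"},
    {"user": "carol", "amount": None},  # incomplete record
]


def filter_records(records):
    """Filtering: drop records whose amount is missing."""
    return [r for r in records if r["amount"] is not None]


def normalize_records(records):
    """Normalizing: trim and lowercase user names, convert amounts to float."""
    return [
        {"user": r["user"].strip().lower(), "amount": float(r["amount"])}
        for r in records
    ]


def consolidate_records(records):
    """Consolidating: sum the amounts per user into one mapping."""
    totals = defaultdict(float)
    for r in records:
        totals[r["user"]] += r["amount"]
    return dict(totals)


def run_pipeline(records):
    """Chain the three stages in order: filter, normalize, consolidate."""
    return consolidate_records(normalize_records(filter_records(records)))


if __name__ == "__main__":
    print(run_pipeline(RAW_RECORDS))
```

Each stage takes a list of records and returns a new one, so stages can be reordered, tested in isolation, or swapped out without touching the others.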
