Building Real-time Machine Learning Foundations at Lyft

Lyft Engineering

JUNE 28, 2023

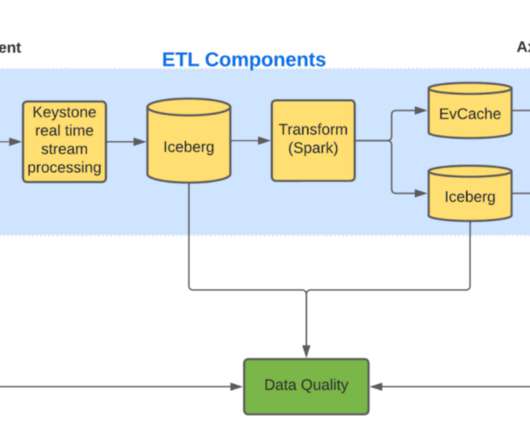

While several teams were already using streaming data in their Machine Learning (ML) workflows, doing so was laborious and sometimes took weeks or months of engineering effort. At the same time, developers across Lyft had a substantial appetite for building real-time ML systems.
