Big Data Technologies that Everyone Should Know in 2024

Knowledge Hut

APRIL 25, 2024

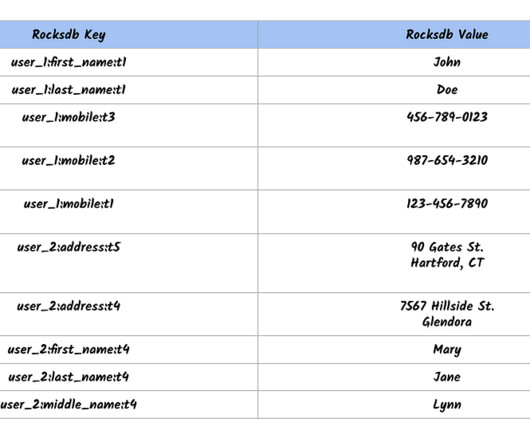

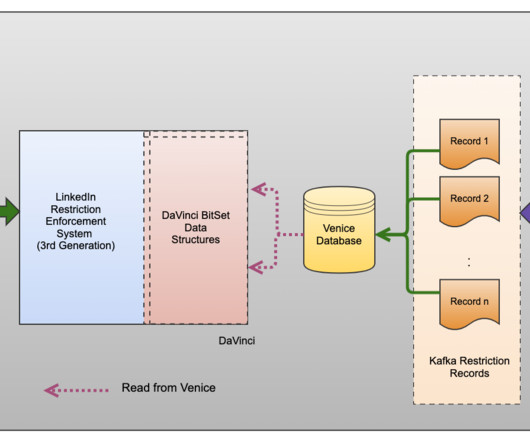

Big data technologies can be grouped into four broad categories: batch processing, streaming, NoSQL databases, and data warehouses. NoSQL databases are designed for horizontal scalability and flexible schemas, which makes them well suited to storing big data. Widely used NoSQL systems include MongoDB, Cassandra, and HBase.
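To make the "flexibility" point concrete, here is a minimal sketch in plain Python. It assumes a toy in-memory collection where dictionaries stand in for documents; the `events` data and the `find` helper are hypothetical names made up for illustration and are not the API of MongoDB, Cassandra, or HBase.

```python
# Toy in-memory "collection": plain dicts stand in for documents.
# Illustration only; this is not the API of any real NoSQL database.
events = [
    {"id": 1, "type": "click", "page": "/home"},
    {"id": 2, "type": "purchase", "amount": 19.99, "currency": "USD"},
    {"id": 3, "type": "click", "page": "/pricing", "referrer": "newsletter"},
]


def find(collection, **criteria):
    """Return the documents whose fields match every given key/value pair."""
    return [
        doc
        for doc in collection
        if all(doc.get(key) == value for key, value in criteria.items())
    ]


# Documents with different fields live side by side; no shared schema is declared.
clicks = find(events, type="click")
usd_purchases = find(events, type="purchase", currency="USD")
```

Each record carries only the fields it needs, and a query simply skips documents that lack the requested field. A real NoSQL system adds persistence, indexing, and distribution across nodes on top of this basic idea.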
