From Zero to ETL Hero: A-Z Guide to Become an ETL Developer

ProjectPro

FEBRUARY 8, 2023

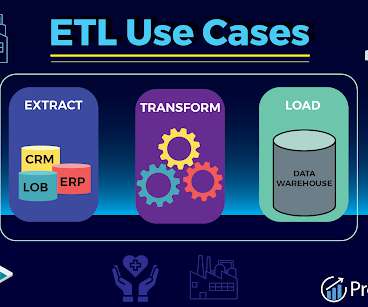

ETL Developer Roles and Responsibilities

Below are the roles and responsibilities of an ETL developer:

Extracting data from various sources such as databases, flat files, and APIs.

Data Warehousing

Knowledge of data cubes, dimensional modeling, and data marts is required.
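The extraction responsibility mentioned above can be illustrated with a minimal sketch. It assumes a CSV string standing in for a flat file and an in-memory SQLite database standing in for a source system. The helper names (`extract_from_flat_file`, `extract_from_database`), the `customers` table, and the sample rows are all hypothetical and chosen only for illustration. API extraction is left out so the example runs without network access.

```python
import csv
import io
import sqlite3


def extract_from_flat_file(text):
    """Parse CSV text into a list of dict rows (all values are strings)."""
    return list(csv.DictReader(io.StringIO(text)))


def extract_from_database(conn, query):
    """Run a query and return rows as dicts keyed by column name."""
    cursor = conn.execute(query)
    columns = [col[0] for col in cursor.description]
    return [dict(zip(columns, row)) for row in cursor.fetchall()]


# Hypothetical sample sources: a CSV "file" and an in-memory database.
csv_text = "id,name\n1,Alice\n2,Bob\n"
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
conn.execute("INSERT INTO customers VALUES (3, 'Carol')")

flat_rows = extract_from_flat_file(csv_text)
db_rows = extract_from_database(conn, "SELECT id, name FROM customers")

# CSV values arrive as strings, so cast ids to int before combining sources.
records = [{"id": int(r["id"]), "name": r["name"]} for r in flat_rows] + db_rows
```

Returning every source as a list of dicts with the same keys gives the later transform and load steps one uniform shape to work with, regardless of where each row came from.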
