5 Reasons to Use APIs to Unleash Your Data

Precisely

FEBRUARY 1, 2024

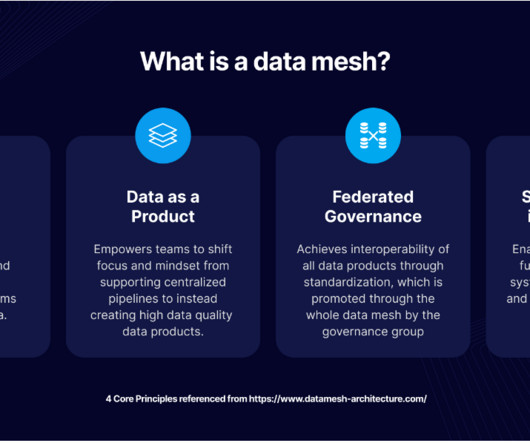

“Data is a key ingredient in deeper insights, more informed decisions, and clearer execution. Advanced firms establish practices to ensure data quality, build data fabrics, and apply insights where they matter most. Data quality and contextual depth are essential elements of an effective data-driven strategy.”
