Case Study: Matter Uses Rockset to Bring AI-Powered Sustainable Insights to Investors

Rockset

AUGUST 27, 2020

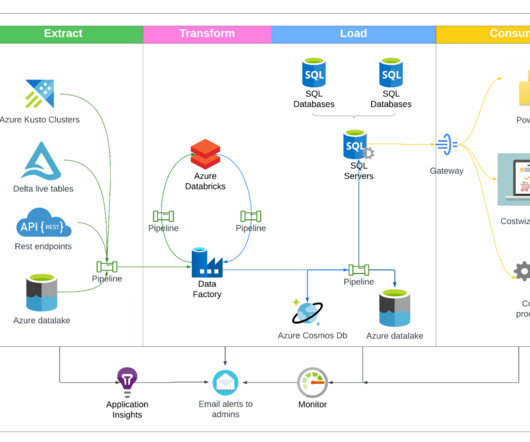

In several of these scenarios, both NoSQL databases and data lakes have been very useful because of their schemaless nature, variable cost profiles and scalability characteristics. This allows us to correct bad predictions made by the AI via our custom tagging app, tapping into the latest data ingested in our pipeline.

Let's personalize your content