AI Implementation: The Roadmap to Leveraging AI in Your Organization

Ascend.io

JANUARY 10, 2024

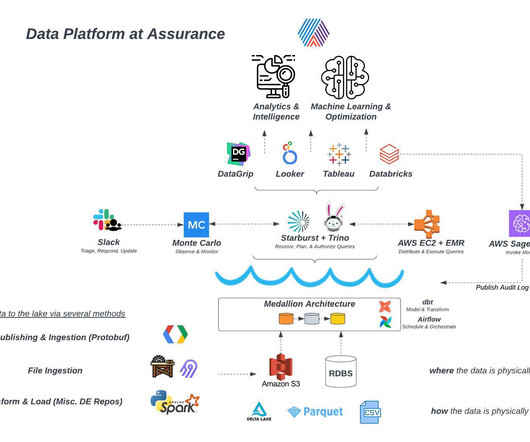

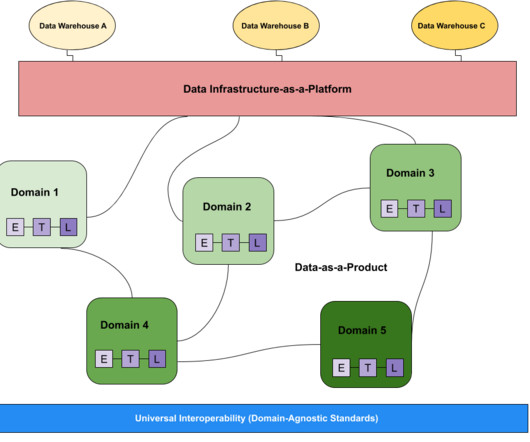

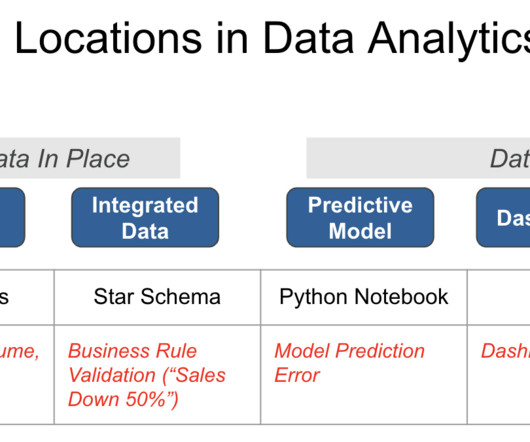

Visual representation of Conway’s Law (source)

Read More: The Chief AI Officer: Avoid The Trap of Conway’s Law

Process: Ensuring Data Readiness

The backbone of successful AI implementation is robust data management processes. AI models are only as good as the data they consume, making continuous data readiness crucial.
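To make "continuous data readiness" a little more concrete, here is a minimal sketch of an automated readiness check that could run before each training or scoring job. Everything in it is an assumption for illustration rather than anything prescribed by the article: the function names `readiness_report` and `is_ready`, the `updated_at` field, and the default thresholds are all hypothetical.

```python
from datetime import datetime, timedelta, timezone


def readiness_report(rows, required_fields, max_age, now=None):
    """Summarize completeness and freshness for a batch of records.

    rows            -- list of dicts; each is assumed to carry a
                       timezone-aware 'updated_at' datetime (hypothetical field)
    required_fields -- field names that must be present and not None
    max_age         -- timedelta; older records count as stale
    """
    now = now or datetime.now(timezone.utc)
    total = len(rows)

    # A row is complete when every required field has a value.
    complete = sum(
        1 for row in rows
        if all(row.get(field) is not None for field in required_fields)
    )

    # A row is fresh when it was updated within the allowed window.
    fresh = sum(
        1 for row in rows
        if row.get("updated_at") is not None
        and now - row["updated_at"] <= max_age
    )

    return {
        "rows": total,
        "completeness": complete / total if total else 0.0,
        "freshness": fresh / total if total else 0.0,
    }


def is_ready(report, min_completeness=0.95, min_freshness=0.9):
    """Gate a downstream AI job on illustrative (assumed) thresholds."""
    return (
        report["rows"] > 0
        and report["completeness"] >= min_completeness
        and report["freshness"] >= min_freshness
    )
```

Running a check like this on a schedule, and blocking model jobs when `is_ready` returns `False`, is one simple way to treat readiness as an ongoing process rather than a one-time cleanup. The right thresholds depend on the use case.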
