Toward a Data Mesh (Part 2): Architecture & Technologies

François Nguyen

MARCH 22, 2021

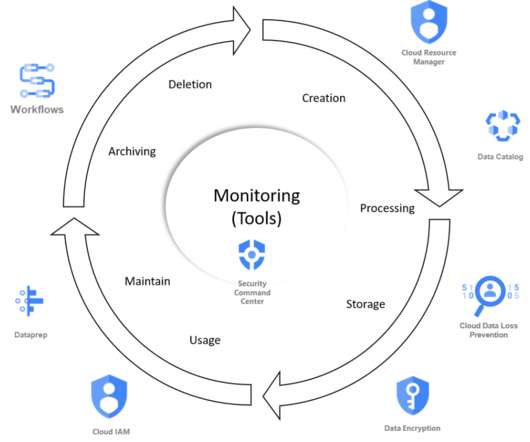

TL;DR Having set up and organized the teams, we now describe four topics that make a data mesh a reality. Each is illustrated with our technical choices and the Google Cloud Platform services we use. There are certainly many other ways to achieve the same result with other technologies.
