Data Teams and Their Types of Data Journeys

DataKitchen

OCTOBER 2, 2023

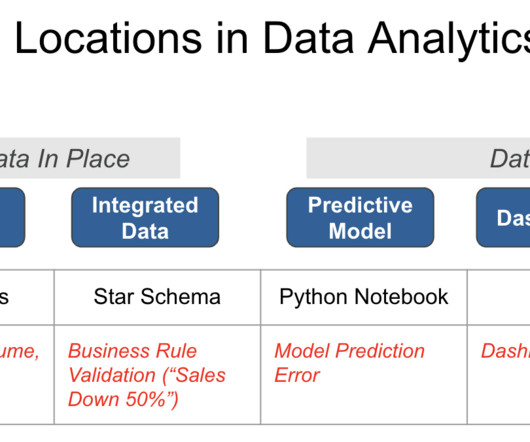

This lack of control is made worse when many people, automated data ingestion processes, or both introduce changes to the data. The result is a chaotic data landscape where accountability is elusive and data integrity is compromised. The Hub Data Journey provides the raw data and adds value through a ‘contract.’

Let's personalize your content